You’ve created a great digital product for your customers and they like the new user experience and interface. It wasn’t a walk in the park and the project took a lot of effort, but finally the product is here and it’s working great.

The performance is very good as well. You are seeing increased traffic, which makes you happy and is good for the business.

But . . . let’s imagine that much of this traffic is coming not from your customers but from bots: automated digital worms that are eating your web application alive. They consume precious computing resources such as CPU power and network bandwidth. Unfortunately, it is you who pays the cloud provider, in effect enabling these bots to scan your content and applications faster. The bots may violate privacy while you remain unaware of it all. They overuse your web application’s resources and create performance bottlenecks, so the application cannot respond effectively to requests from real users, and your customers notice the lower performance and responsiveness.

It is a digital nightmare that has come true. So, what can you do?

Our Wao.io Web Performance Optimization Team has been obsessed with web performance for years, in a good way. They have helped hundreds of customers optimize their web experiences with our consulting services and products (wao.io, couper.io).

To do that ever more effectively and to respond to the ever-changing digital landscape, our team is constantly researching new ideas and technologies.

One important research project is about analyzing suspicious web traffic and acting on it.

Our team constantly analyzes web traffic. They combine rules created by experts with automation that takes advantage of the latest machine learning technologies, including advanced neural networks and random forests (ensembles of decision trees).

The traffic is then classified into normal traffic and suspicious traffic. It’s a very complex process that requires a lot of expertise in this area, as well as a great deal of experimentation to find the right . . . questions and classification criteria.
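As a rough illustration of how several weak criteria can be combined into a single normal/suspicious decision, the sketch below uses a simple majority vote. It is not the team’s classification engine: the feature names (`requests_per_minute`, `distinct_mime_types`, `empty_user_agent_share`) and all thresholds are hypothetical. A random forest relies on a similar voting principle, but with decision trees learned from data instead of hand-written rules.

```python
from typing import Callable, Dict, List

Features = Dict[str, float]
Voter = Callable[[Features], bool]

# Hypothetical hand-written criteria (assumptions, not real production rules).
# Each voter returns True when it considers the traffic suspicious.
VOTERS: List[Voter] = [
    lambda f: f["requests_per_minute"] > 60,
    lambda f: f["distinct_mime_types"] <= 1,
    lambda f: f["empty_user_agent_share"] > 0.5,
]


def classify(features: Features, voters: List[Voter] = VOTERS) -> str:
    """Label traffic 'suspicious' when a strict majority of voters flag it."""
    votes = sum(1 for voter in voters if voter(features))
    return "suspicious" if votes * 2 > len(voters) else "normal"


# Made-up feature vectors for two clients.
crawler = {"requests_per_minute": 300, "distinct_mime_types": 1, "empty_user_agent_share": 0.9}
visitor = {"requests_per_minute": 12, "distinct_mime_types": 5, "empty_user_agent_share": 0.0}

print(classify(crawler), classify(visitor))
```

A single criterion firing on its own is not enough to flag a client here, which mirrors the point that no individual rule is reliable in isolation.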

Bots routinely use an older version of HTTP (1.1), generate single requests repeatedly, and always ask for the same type of content (MIME type). But . . . it is not always 1.1, not always single requests, and sometimes the User-Agent string is even empty. For traffic to be considered bot traffic, a similar behavior pattern has to repeat, so that the classification engine can make a reliable suggestion. We wish the rules were simpler, but they are not.

Many variables and parameters are taken into account to process all this information properly and to find out which ones matter.

This is not just an occasional flicker, like a glitch in the Matrix movie. There is much more of it: sometimes as much as 90% of the traffic is unwanted traffic coming from bots!
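To make the signals above more concrete, here is a minimal sketch that turns them into a per-client score. It assumes requests have already been parsed into a hypothetical `Request` record; the `MIN_REQUESTS` cutoff and the equal weighting of the three signals are illustrative assumptions, not the rules the team actually uses.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Request:
    client_id: str     # hypothetical key, e.g. an IP address or session id
    http_version: str  # "1.1", "2", ...
    mime_type: str     # type of content requested
    user_agent: str    # raw User-Agent header, may be empty


MIN_REQUESTS = 5  # assumption: a pattern must repeat before it is scored


def suspicion_score(requests: List[Request]) -> float:
    """Average of three signal shares, from 0.0 (benign) to 1.0 (bot-like)."""
    n = len(requests)
    if n < MIN_REQUESTS:
        return 0.0  # too little repeated behavior for a reliable suggestion
    http11_share = sum(r.http_version == "1.1" for r in requests) / n
    top_mime_share = Counter(r.mime_type for r in requests).most_common(1)[0][1] / n
    empty_ua_share = sum(not r.user_agent.strip() for r in requests) / n
    return round((http11_share + top_mime_share + empty_ua_share) / 3, 2)


def score_clients(requests: Iterable[Request]) -> Dict[str, float]:
    """Group requests by client and score each group separately."""
    by_client: Dict[str, List[Request]] = defaultdict(list)
    for request in requests:
        by_client[request.client_id].append(request)
    return {client: suspicion_score(group) for client, group in by_client.items()}
```

Because each signal is a share and not a yes/no flag, a client that only sometimes uses HTTP/1.1 or only sometimes sends an empty User-Agent still contributes partial evidence, which reflects the "not always" caveats above.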

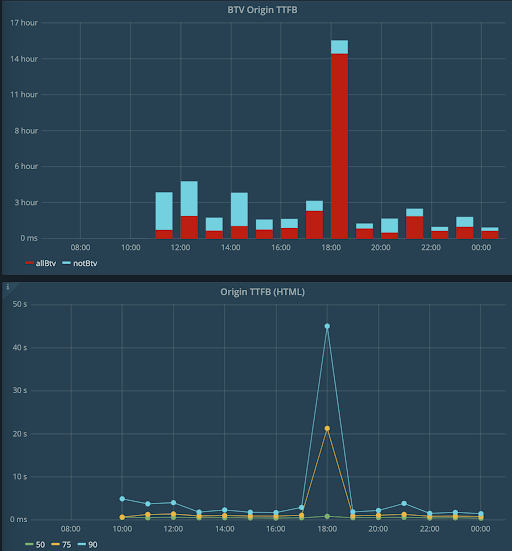

Our web performance optimization team shared these examples to help us visualize the impact.

This is how bots (the red part of the bars in the upper chart) affect a critical web application performance metric called Time To First Byte (percentiles in the lower chart). Bots are invisible to most user tracking tools. Ironically, bots that are meant to help with search ranking can actually hurt your rankings, because the lowered performance can result in a penalty from Google.

Even worse, what makes this difficult is that some bots are acceptable for particular business clients and unwanted for others.
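For readers who want to reproduce a percentile view of Time To First Byte from their own measurements, the following sketch uses Python’s standard `statistics.quantiles`. The sample values are made up for illustration and the choice of the 50th, 90th, and 99th percentiles is an assumption; the charts above may use different ones.

```python
import statistics
from typing import Dict, Sequence


def ttfb_percentiles(
    samples_ms: Sequence[float],
    percentiles: Sequence[int] = (50, 90, 99),
) -> Dict[int, float]:
    """Return the requested percentiles (each between 1 and 99) in milliseconds."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two TTFB samples")
    # n=100 yields 99 cut points; cut point p-1 is the p-th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {p: cuts[p - 1] for p in percentiles}


# Made-up TTFB samples (ms) for a quiet time window and a bot-heavy one.
quiet_window = [80, 85, 90, 95, 100, 105, 110, 120, 130, 150]
busy_window = [120, 140, 180, 220, 300, 450, 600, 900, 1200, 2000]

print(ttfb_percentiles(quiet_window))
print(ttfb_percentiles(busy_window))
```

Comparing the higher percentiles across time windows tends to be more telling than comparing averages, since a bottleneck usually shows up first in the slowest responses.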
