The most common types of AI models explained

February 3, 2026 13 min read

“The future is already here — it’s just not evenly distributed.” – William Gibson

The amount of compute used to train AI models has grown rapidly since 2010, at roughly 4.7x per year. This rise in capability is not only about spending more money on larger compute clusters: hardware and engineering are improving in ways few people anticipated. What used to take months of effort and millions of dollars can now happen in days, sometimes hours. But compute is not the whole story. If you feed a model poor-quality data, you get poor output, and no hardware can fix that: training data matters. AI's transformative potential also rests on its ability to learn from data on its own and to mimic aspects of human intelligence.

Together, these factors give AI tremendous power. The models shaping our world now handle tasks that range from predicting disease outbreaks to detecting fraud in milliseconds. They are learning faster, reasoning more deeply, and producing logic that once only humans could devise.

Why AI models are the backbone of modern business

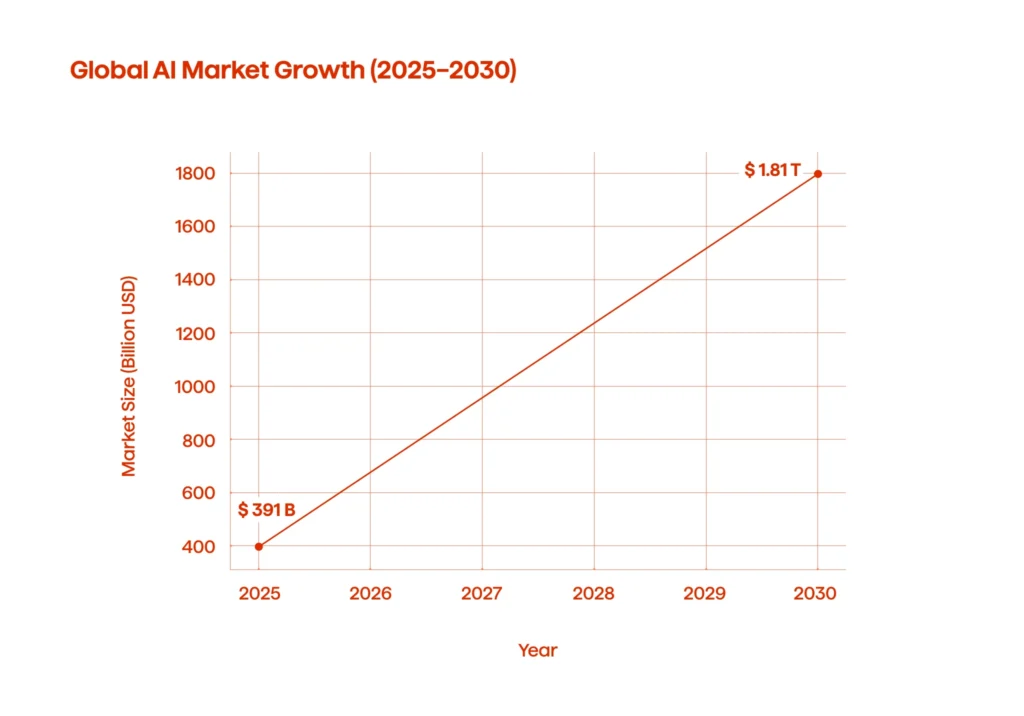

The global AI market is forecast to grow from $391 billion in 2025 to $1.81 trillion by 2030. Several factors drive this growth: demand for more sophisticated machine learning models and algorithms, advances in hardware, and a steadily rising number of business AI applications and use cases across sectors.

The market surge reflects a transformation in how businesses are organized and operated. Companies no longer treat AI as an experiment; they are fast-tracking AI systems into everyday operations. At the crux of this shift are the models that turn data into actionable intelligence. They range from supervised learning classifiers that estimate credit risk in finance to deep learning neural networks that identify micro-fractures in industrial equipment. These models are the decision engines of modern data science workflows. They sit at the heart of AI pipelines and drive demand for computing resources that can keep up with real-time data.

In healthcare, models can analyze medical images quickly enough to deliver in minutes a diagnosis that would take a human team days. In retail, algorithms personalize the experience of millions of users at once, turning anonymous browsing into loyalty. In manufacturing, reinforcement learning models fine-tune robots on the factory floor, increasing throughput and shortening cycle times.

This is why so many businesses are investing in models. Without models to put it to work, a larger server farm is simply an expensive data warehouse. Models turn raw processing power into real value for an organization. They enable faster and better decisions and quicker adaptation to market changes. They also deliver experiences that feel intuitive and intelligent, almost human.

In short, here are the main reasons organizations are adopting AI models like never before:

- From data to decisions. AI models convert vast amounts of data into usable information. This lets businesses make faster and better-informed decisions than traditional analysis methods allow.

- Scalability across sectors. Unlike traditional AI models built for narrow industry applications — such as healthcare diagnostics or financial fraud detection — modern general-purpose models like GPT, CLIP, SAM, and Whisper provide flexible foundations. They can understand language, vision, or speech and be fine-tuned to accelerate innovation across diverse sectors, from automotive to healthcare to finance.

- Greater automation. These models are embedded into AI systems and within AI pipelines, automating many complex tasks, reducing costs, speeding up verification times, and increasing accuracy.

- Real-time adjustments. With enough computational power, models can work with streaming data. They can then respond to, or even anticipate, changes in market conditions or customer preferences in real time.

- Innovation and competitive advantages. Companies that adopt modern AI models often uncover opportunities to create new products, improve supply-chain efficiency, and provide personalized experiences that competitors cannot match.

- Simulation of human intelligence. Some AI models, mainly deep learning and reinforcement learning, simulate parts of human intelligence. This lets firms automate decisions that have historically required human involvement.

Key types of AI models and how they work

Artificial Intelligence is not a singular concept; instead, it refers to a suite of methods designed to solve a panoply of problems.

The main types of AI models include machine learning (ML), which encompasses supervised, unsupervised, and reinforcement learning approaches; deep learning (DL), a subset of ML that uses multi-layer neural networks such as CNNs, RNNs, and Transformers to handle complex data; generative AI, including GANs, VAEs, and LLMs, which create new content across text, images, audio, or code; rule-based AI, which relies on predefined logic rather than learned patterns; and hybrid or multimodal models, which combine different AI techniques to address more complex, cross-domain problems.

Here is a detailed classification of AI models based on their approaches, architectures, learning paradigms, and capabilities:

1. Classification by fundamental approach

1.1 Rule-based (symbolic AI)

These systems operate on explicitly programmed logic and predefined rules. They follow deterministic decision logic, such as if-then rules or decision trees, written by human experts. Their behavior is predictable and transparent, but the rules must be updated by hand whenever business logic changes.
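A rule-based system can be sketched in a few lines. The example below is a minimal, hypothetical loan-approval checker. The `Application` fields, rule names, and thresholds are illustrative assumptions and do not come from any real system. Every decision can be traced to the rules that fired, which is where the transparency comes from. Changing the business logic means editing `RULES` by hand.

```python
from dataclasses import dataclass


@dataclass
class Application:
    # Hypothetical fields for an automated approval workflow
    amount: float
    annual_income: float
    has_prior_default: bool


# Each rule is (name, predicate). The predicates and thresholds are
# illustrative assumptions written by a "human expert", not learned from data.
RULES = [
    ("prior_default", lambda a: a.has_prior_default),
    ("amount_exceeds_half_income", lambda a: a.amount > 0.5 * a.annual_income),
]


def evaluate(app: Application) -> tuple[str, list[str]]:
    """Return a decision plus the names of every rule that fired."""
    fired = [name for name, predicate in RULES if predicate(app)]
    decision = "reject" if fired else "approve"
    return decision, fired


print(evaluate(Application(amount=10_000, annual_income=60_000, has_prior_default=False)))
# ('approve', [])
print(evaluate(Application(amount=40_000, annual_income=60_000, has_prior_default=True)))
# ('reject', ['prior_default', 'amount_exceeds_half_income'])
```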

1.2 Machine learning-based

In this case, models learn patterns directly from data without explicit programming. They adapt and improve through exposure to examples. Performance scales with data quality and quantity. They can handle complex, non-linear relationships, but require substantial training data and computational resources.

1.3 Hybrid (combines both)

Hybrid models integrate rule-based logic with machine learning capabilities. They leverage the transparency of symbolic reasoning alongside the adaptability of learning algorithms. This approach balances interpretability with performance.
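A hybrid system can be sketched as a learned score wrapped in explicit rules. In this minimal sketch, a logistic scoring function stands in for the trained model. Its weights (`WEIGHTS`, `BIAS`) are made-up values assumed to have come from training. The hypothetical compliance list `SANCTIONED_COUNTRIES` is checked first and overrides the model regardless of its score.

```python
import math

# Weights assumed to have been learned from historical fraud data;
# the values here are made up purely for illustration.
WEIGHTS = {"amount_zscore": 1.5, "new_device": 2.0}
BIAS = -3.0

# Hypothetical compliance list ("XX" is a placeholder, not a real country).
SANCTIONED_COUNTRIES = {"XX"}


def learned_fraud_score(tx: dict) -> float:
    """Learned layer: a logistic model returning a score in (0, 1)."""
    z = BIAS + sum(w * tx[feature] for feature, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def decide(tx: dict, threshold: float = 0.5) -> str:
    # Symbolic layer first: explicit rules override the learned model.
    if tx["country"] in SANCTIONED_COUNTRIES:
        return "block (compliance rule)"
    # Learned layer: flag transactions the model scores as risky.
    if learned_fraud_score(tx) >= threshold:
        return "review (model score)"
    return "allow"


print(decide({"amount_zscore": 0.2, "new_device": 0, "country": "DE"}))  # allow
print(decide({"amount_zscore": 2.0, "new_device": 1, "country": "DE"}))  # review (model score)
print(decide({"amount_zscore": 0.2, "new_device": 0, "country": "XX"}))  # block (compliance rule)
```

Putting the rule check before the model keeps the compliance behavior fully predictable and auditable. The learned part only handles cases the rules do not decide.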

| Model approach | How it learns | Key algorithms | Common use cases |
|---|---|---|---|
| Rule-based (symbolic AI) | Programmed with explicit rules and logic by human experts. | Expert systems, decision trees (rule-based), inference engines | Compliance checking, automated approval workflows |
| Machine learning-based | Learns patterns automatically from training data. | Neural networks, random forests, support vector machines | Image recognition, predictive analytics, recommendation systems |
| Hybrid | Integrates predefined rules with data-driven learning. | Neuro-symbolic systems, knowledge-enhanced neural networks | Medical diagnosis, fraud detection with compliance rules |

2. Classification by model architectures

2.1 Classical machine learning models (decision trees, SVMs, gradient boosting)

These models work well with structured, tabular data and require less computational power than deep learning approaches. Feature engineering plays a critical role in their performance.

Decision trees offer an intuitive visualization of decision pathways. Support vector machines excel at binary classification tasks. Gradient boosting builds an ensemble of weak models sequentially, with each new model correcting the errors of the ones before it. These architectures remain industry standards for many business applications because they are efficient and interpretable.
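The sequential error-correcting idea behind gradient boosting fits in a short sketch. This minimal example, assuming a regression task with squared loss, uses one-split decision trees ("stumps") as the weak models. Each round fits a new stump to the residuals the ensemble still gets wrong. `n_rounds` and `learning_rate` are arbitrary illustrative values. Production libraries add deeper trees, regularization, and many more loss functions.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression stump to the residuals by least squares.

    Assumes xs contains at least two distinct values.
    """
    best = None
    # Try every split point except the largest value (which would leave the right side empty)
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Gradient boosting for regression with squared loss and stump weak learners."""
    base = sum(ys) / len(ys)  # initial prediction: the mean target
    stumps = []

    def predict(x):
        # Ensemble prediction: base value plus the shrunken contribution of every stump
        return base + sum(learning_rate * stump(x) for stump in stumps)

    for _ in range(n_rounds):
        # With squared loss, the negative gradient is simply the residual y - prediction
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


model = gradient_boost([1, 2, 3, 4, 5, 6], [1, 1, 1, 5, 5, 5])
print(round(model(1), 2), round(model(6), 2))  # 1.01 4.99
```

The small `learning_rate` deliberately shrinks each stump's contribution. The ensemble therefore approaches the targets gradually over many rounds instead of overfitting in one step.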

2.2 Deep learning models (neural networks, CNN, Transformer, GAN, etc.)

Deep learning is a subset of machine learning built on multi-layer neural network architectures such as CNNs, RNNs, and Transformers. It is designed to process large datasets and capture complex patterns. Instead of relying on hand-crafted features, these models learn their own representations through successive layers of the network. Image recognition, voice recognition, and speech recognition all rely on deep learning.

For example, Transformers and recurrent neural networks (RNNs) dominate text and sequence modeling, while convolutional neural networks (CNNs) excel at image tasks. The potential of deep learning lies in its ability to extract meaning from unstructured data such as text, audio, and images. Medical professionals, for instance, use deep learning to help spot abnormalities in radiology scans faster and more accurately.
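The core operation inside a CNN is the convolution: a small kernel slides over the image and responds to local patterns such as edges. In this minimal sketch the kernel is hand-set to detect a vertical edge, purely for illustration. In a real CNN, kernel values are learned during training, and frameworks compute this far more efficiently. Like most deep learning libraries, the function computes cross-correlation (no kernel flip) without padding.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Non-linearity applied after the convolution: negative responses become 0."""
    return [[max(0, v) for v in row] for row in feature_map]


# 4x4 grayscale image: dark left half, bright right half
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-set vertical-edge kernel (a trained CNN would learn its own values)
kernel = [
    [-1, 1],
    [-1, 1],
]
print(relu(conv2d(image, kernel)))  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The feature map lights up only in the column where dark meets bright. Stacking many such learned filters, layer after layer, is how CNNs build up from edges to shapes to objects.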

| Model architecture | How it learns | Key algorithms | Common use cases |
|---|---|---|---|
| Classical machine learning models | Learns from structured data using statistical methods and mathematical optimization. | Decision trees, SVMs, gradient boosting | Credit scoring, tabular data analysis, risk assessment |
| Deep learning models | Uses multi-layer neural networks to extract features from raw input data. | Neural networks, CNN, Transformer, GAN | Computer vision, speech recognition, language translation |

3. Classification by learning paradigm (how ML-based models learn from data)

3.1 Supervised learning models

Supervised learning is similar to teaching with an answer key. A data scientist provides the algorithm with a labeled dataset, such as a collection of email messages marked as spam or not spam. The model analyzes the labeled data and learns the patterns that distinguish the two classes. It then applies those patterns to fresh, unlabeled inputs.

Supervised learning can deliver high accuracy on well-labeled datasets and is often relatively quick to implement in business settings. However, it relies heavily on large, high-quality labeled data and can struggle with unseen or evolving patterns. It may require significant effort and cost to maintain and scale. Gradient boosting, support vector machines, and decision trees are arguably the most popular supervised learning methods.
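Supervised learning can be illustrated with the simplest possible decision tree: a single split, or "decision stump". This is a minimal sketch. The feature (a count of "suspicious" words per email) and the labeled data are made up. The algorithm picks the threshold that makes the fewest mistakes on the labeled examples and then applies that rule to new, unlabeled emails.

```python
def train_stump(features, labels):
    """Learn the threshold on one numeric feature that minimizes training errors.

    The learned rule predicts 1 (spam) when feature > threshold, else 0 (not spam).
    """
    best_threshold, best_errors = None, len(labels) + 1
    for threshold in sorted(set(features)):
        # Count how many labeled examples this candidate threshold gets wrong
        errors = sum((1 if x > threshold else 0) != y for x, y in zip(features, labels))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


# Labeled training data (made up): suspicious-word counts, 1 = spam, 0 = not spam.
# The labels play the role of the "answer key".
suspicious_word_counts = [0, 1, 1, 2, 5, 6, 7, 9]
is_spam = [0, 0, 0, 0, 1, 1, 1, 1]

threshold = train_stump(suspicious_word_counts, is_spam)
print(threshold)  # 2

# Apply the learned rule to new, unlabeled emails
new_emails = [0, 3, 8]
print([1 if x > threshold else 0 for x in new_emails])  # [0, 1, 1]
```

Full decision trees repeat this split search recursively over many features. Gradient boosting and random forests combine many such trees.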

3.2 Unsupervised learning models

Sometimes you have a lot of data that needs to be organized or interpreted, but no labels. This is where unsupervised learning comes in. The ML model groups the data or reduces its dimensionality by uncovering hidden structure, without any labeled examples to learn from.

Unsupervised techniques, such as clustering algorithms (e.g., k-means) or principal component analysis (PCA), can reveal relationships a human might overlook. Retailers, for example, use unsupervised learning to segment their customers so they can distribute and target deals more effectively. Finding hidden patterns can give a company a competitive advantage, especially when data labeling is too costly or time-consuming.
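Customer segmentation with k-means can be sketched in plain Python. This is a minimal version, assuming two made-up features per customer: annual store visits and average basket value. The initial centroids are passed in explicitly to keep the example deterministic. Library implementations typically use random or k-means++ initialization, and real features would usually be scaled first.

```python
def kmeans(points, centroids, n_iters=10):
    """Plain k-means on 2-D points, starting from the given initial centroids."""
    clusters = []
    for _ in range(n_iters):
        # Assignment step: attach each point to its nearest centroid (squared distance)
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [(p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2 for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its points (keep it if empty)
        centroids = [
            (sum(p[0] for p in cl) / len(cl), sum(p[1] for p in cl) / len(cl)) if cl else c
            for cl, c in zip(clusters, centroids)
        ]
    return centroids, clusters


# Made-up customers: (annual visits, average basket value). No labels are given.
customers = [(2, 20), (3, 25), (2, 22), (20, 80), (22, 85), (21, 90)]
centroids, clusters = kmeans(customers, [customers[0], customers[3]])
print([len(c) for c in clusters])  # [3, 3]
print([tuple(round(v, 1) for v in c) for c in centroids])  # [(2.3, 22.3), (21.0, 85.0)]
```

The algorithm discovers an "occasional, small-basket" group and a "frequent, large-basket" group without being told either exists.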

3.3 Reinforcement learning models

Reinforcement learning works by trial and error rather than by learning from labeled examples. The model, known as an “agent”, takes actions in an environment and is then ‘rewarded’ or ‘penalized’ based on the outcome. Over time, the agent discovers which actions, or rules for choosing them, yield the maximum cumulative reward.

This approach suits problems that have goals rather than a single ‘correct’ answer. Logistics companies such as UPS use reinforcement learning methods to optimize delivery routes in real time. These methods account for factors such as changed delivery locations, traffic, and weather. Reinforcement learning also powers game-playing AI such as AlphaGo, which combined reinforcement learning with search to beat top human Go champions. It explored far more possible move sequences than any human could consider in real time.
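The reward-driven loop described above can be sketched with tabular Q-learning, one of the algorithms listed in the table. This minimal sketch uses an assumed toy environment: a five-cell corridor where reaching the last cell earns a reward of 1. It is not a model of real routing. The hyperparameters (`GAMMA`, `ALPHA`, episode count) are arbitrary. The agent explores by acting at random. Because Q-learning is off-policy, it still learns the value of acting greedily.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # move left or move right
GAMMA = 0.9           # discount factor for future rewards
ALPHA = 0.5           # learning rate


def step(state, action):
    """Toy environment: reward 1 for reaching the goal cell, 0 otherwise."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes=500, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial: pick an action at random (pure exploration)
            action = rng.choice(ACTIONS)
            next_state, reward, done = step(state, action)
            # Error signal: reward plus discounted value of the best next action
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += ALPHA * (reward + GAMMA * best_next - q[(state, action)])
            state = next_state
    return q


q = q_learning()
# Greedy policy for each non-goal cell: the action with the highest learned value
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]
```

Nobody tells the agent that "right" is correct. It infers this from delayed rewards, as value propagates backward from the goal cell.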

| Learning paradigm | How it learns | Key algorithms | Common use cases |
|---|---|---|---|
| Supervised learning | From a labeled dataset with known outputs | Decision trees, SVM, linear regression | Fraud detection, credit scoring, demand forecasting |
| Unsupervised learning | Finds hidden patterns in unlabeled data | K-means, PCA, hierarchical clustering | Customer segmentation, anomaly detection, recommendation systems |
| Reinforcement learning | By interacting and receiving rewards/penalties | Q-learning, policy gradients | Route optimization, dynamic pricing, robotics control |

4. Classification by model capability

4.1 Generative AI models (create content)

Generative AI can produce text, images, video, and even synthetic data that looks strikingly real.

Generative AI models do this by learning the statistical patterns in enormous datasets and then creating new examples that share those patterns. A generative model trained on product designs could suggest new shapes beyond what engineers might invent on their own. Similarly, language models like GPT generate human-like text and conversation, powering chatbots and content engines across industries.

What’s the advantage? Creative automation at scale, including personalized marketing text, AI-generated product prototypes, or drug compounds designed in silico before they hit the lab.
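The "learn the patterns, then sample new examples" idea can be illustrated with a toy bigram (Markov chain) text generator. This is a deliberately simplified stand-in, not how GPT works internally. LLMs also generate text one token at a time from learned probabilities, but they condition on long contexts with Transformer networks rather than a lookup table. The corpus below is made up.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Record which word follows which: the 'statistical patterns' of the text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(model, start, length=8, seed=0):
    """Sample new text by repeatedly picking a plausible next word."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        candidates = model.get(output[-1])
        if not candidates:
            break  # no known continuation for this word
        # Words that followed more often in training are proportionally more likely
        output.append(rng.choice(candidates))
    return " ".join(output)


corpus = (
    "our new running shoe is light and fast . "
    "our new trail shoe is light and durable . "
    "the trail shoe is fast and durable ."
)
model = train_bigram_model(corpus)
print(generate(model, "our"))
```

The output changes with `seed`. Every word pair it produces appeared somewhere in the training text, yet the full sentence may be new: that is generation in miniature.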

4.2 Discriminative AI models (classify/predict)

These models focus on classification and prediction tasks. They analyze input data to assign labels or forecast outcomes. Discriminative models learn decision boundaries between categories and are well-suited for pattern recognition and anomaly detection. Common applications include fraud detection, medical diagnosis, and customer segmentation.
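Logistic regression is one of the simplest discriminative models. It learns the probability of a label given the input, and its decision boundary sits where that probability crosses 0.5. This minimal sketch trains it with plain batch gradient descent on a made-up, standardized one-feature dataset (1 = fraudulent, 0 = legitimate). The learning rate and epoch count are arbitrary choices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(xs, ys, lr=0.1, epochs=2000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the average log loss: (predicted probability - label) terms
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b


# Made-up, already-standardized risk feature; label 1 = fraudulent, 0 = legitimate
xs = [-3, -2, -1, 1, 2, 3]
ys = [0, 0, 0, 1, 1, 1]
w, b = train_logistic_regression(xs, ys)


def predict(x):
    """Classify by which side of the learned decision boundary x falls on."""
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


print([predict(x) for x in [-2.5, 2.5]])  # [0, 1]
```

Unlike the generative sketch, this model never learns what inputs look like. It only learns where to draw the line between classes.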

| Model capability | How it learns | Key algorithms | Common use cases |
|---|---|---|---|
| Generative AI | Learns patterns from vast, often unlabeled, datasets. | GANs, VAEs, and LLMs | Content creation, data analysis, document processing |
| Discriminative AI | Creates decision boundaries between categories to classify inputs or predict outcomes. | Logistic regression, support vector machines, random forests, CNN | Spam detection, fraud detection, medical diagnosis |

Choosing the right AI model for your business

When choosing among the most common AI models, the most critical step is to establish what type of problem you want to solve: prediction, classification, pattern discovery, or creation.

For example, a bank that wants to reduce fraud may use a supervised method, such as a decision tree or random forest, trained on labeled historical transactions. Such models can flag suspicious activity in milliseconds. A retailer that wants to understand its customer segments might instead choose an unsupervised model. That model groups shoppers by purchasing patterns without any predefined labels.

For pattern discovery when the data has no existing labels, clustering algorithms such as K-Means or DBSCAN are great options. These unsupervised learning models automatically find inherent groupings or structures within the data. For instance, a telecommunications company might use clustering to identify network usage patterns that signal the need for maintenance before a failure occurs.

Finally, if the goal is creation, such as generating realistic images, writing human-quality text, or designing new drug molecules, a generative AI model is necessary. These models learn the underlying distribution of the input data to produce entirely new, yet plausible, outputs.

Generative AI models are also making their way into creative fields. Nike, for example, has explored AI-driven generative footwear design. It used neural networks to reach design aesthetics beyond the scope of any individual human designer. However, selecting the model is only the starting point.

Overcoming challenges in AI adoption

Implementing AI models can be complicated. One of the hardest problems to contend with is data quality. Companies routinely collect tremendous amounts of data, but much of it is messy, incomplete, or biased, which leads to poor predictions. Well-defined AI pipelines that clean, label, and refresh datasets, including after special events, make a real difference. Deployed models keep making predictions in the wild, and you want those predictions to be as accurate as possible.

The computational resources needed for model training pose another problem. Most complex models, including deep learning and generative AI, require specialized hardware such as GPUs or cloud-based infrastructure. Organizations that estimate their computing needs without a thorough analysis frequently run into limited scalability and delays.

Talent shortages create friction, too. Good ML/AI engineers are hard to find, and competition for them is fierce. Lastly, models must remain explainable and ethical. Neural networks are complex and often behave like black boxes, which makes it difficult to justify their decisions to regulators and customers. However, companies are increasingly adopting explainable AI methods and fairness audits to maintain trust in AI.

The future of Artificial Intelligence models in business

The next generation of AI models will further change how we interact with machines by bringing new levels of creativity and human-like reasoning. According to Accenture, companies that use sophisticated AI models could see an average 38% boost in profitability by 2030. That would be a competitive leap, not an incremental change.

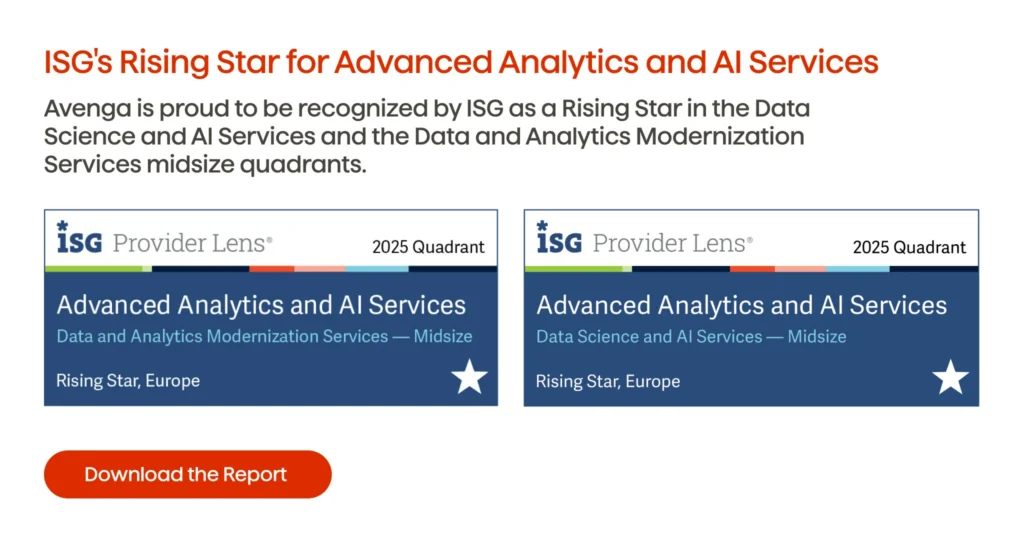

The future belongs to companies using AI models as structural drivers of performance and expansion rather than experimental innovations. Want to learn more about different types of AI models? Contact Avenga, your trusted technology partner.