Energy Efficient Software Development AKA Green Development

April 24, 2025 15 min read

How Can Developers Contribute to the Goal of Sustainability?

In the global effort towards sustainable development, part of the discussion is about how developers can create better software, which now means greener software on top of all the existing requirements.

Avenga takes sustainability very seriously in its strategy.

→ Explore Sustainability with Avenga

This time, let’s take a look at a less discussed topic: green software development.

As Humans

Developers can contribute to the global effort toward carbon neutrality the same way everyone else can, by following good practices such as leaving their cars at home, planting trees, using less water, etc.

As Users of Computers

As computer users, developers can also follow all the usual energy efficiency tips: using the power-saving features of their workstations (automatic display sleep, balanced CPU profiles, etc.), not leaving their test servers running when they are not needed, and not building the entire system every time but only the modules/microservices they need (inefficient or overused build systems are CPU, I/O, and power hogs and, as a result, CO2 hogs).
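As a rough illustration of building only what changed, here is a minimal Python sketch. It assumes a monorepo where each module lives in its own directory and the list of changed files comes from something like `git diff --name-only`. The function name and paths are hypothetical, and the sketch ignores dependencies between modules, which a real build system would also have to follow.

```python
from pathlib import PurePosixPath


def modules_to_rebuild(changed_files, module_dirs):
    """Return the module directories that contain at least one changed file."""
    affected = set()
    for path in changed_files:
        parts = PurePosixPath(path).parts
        for module in module_dirs:
            module_parts = PurePosixPath(module).parts
            # Compare whole path components, so "services/orders-v2" does not match "services/orders".
            if parts[:len(module_parts)] == module_parts:
                affected.add(module)
    return sorted(affected)


# Hypothetical repository layout.
changed = ["services/orders/handler.go", "services/orders/README.md", "libs/auth/token.go"]
modules = ["services/orders", "services/payments", "libs/auth"]
print(modules_to_rebuild(changed, modules))  # ['libs/auth', 'services/orders']
```

Only two of the three modules are rebuilt here; `services/payments` is skipped entirely, together with the CPU time and power its build would have taken.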

Of course, some people mine crypto on their corporate laptops and servers, so here is a friendly reminder: one hour of mining may consume more power than an entire day of development. That should be reason enough to stop, besides the fact that it violates corporate policies.

But there’s much more that they (we) can do as software designers and coders.

As Developers

The next part of this article reviews the different aspects of software development and deployment (which, as we all know, are bound together and should not be treated separately).

This list is an overview, and the area is not new, as power optimization is as old as computing itself, but I want to focus here on the enterprise application development perspective.

Client Side – Mobile and Web Applications

Mobile Apps

Mobile developers are familiar with the requirements to design and implement applications that follow best practices for battery savings.

Some of them are hard requirements imposed by the app stores, while the rest are part of the customer experience. Customers/users do not want mobile apps that drain their battery more than necessary to perform their functions.

The infamous overnight battery drains and background wake-ups of smartphones have to be avoided.

The same applies to saving battery on AMOLED screens by using darker themes and colors, yet many apps still do not follow the system’s dark mode setting. The power consumption of white versus black (or very dark) backgrounds differs by tens or even hundreds of percent.

And the more battery the apps use, the more often users have to charge their phones, consuming electrical power.

Web Apps

Web apps are also mobile apps. Yes, they are, but their creators so often overlook this. Even though more than half of the users will access them from mobile devices, battery optimization is often not even among the top 10 priorities when developing them.

Plus, regular laptop users want their batteries to last longer, and there’s so much that web designers and developers can do in this regard.

Unnecessary animations, heavy scripts, heavyweight client-side frameworks where static web pages would suffice, poor caching that triggers unnecessary CPU cycles and network I/O calls, unoptimized stylesheets rendered on the CPU instead of the more efficient GPU, etc.; the list goes on and on.
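On the caching point, HTTP already offers the tools: `Cache-Control: max-age` lets the browser skip a request entirely while its copy is fresh, and an `ETag` lets it revalidate cheaply, getting a bodyless `304 Not Modified` when nothing changed. Below is a minimal, framework-free Python sketch of the server side; `respond` is a hypothetical helper rather than any framework’s API, the one-hour `max-age` is an arbitrary assumption, and header lookup is simplified to exact-case dictionary keys.

```python
import hashlib


def make_etag(body: bytes) -> str:
    # Content-derived validator, quoted as HTTP requires.
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def _matches(if_none_match: str, etag: str) -> bool:
    candidates = [tag.strip() for tag in if_none_match.split(",") if tag.strip()]
    # If-None-Match uses weak comparison, so a W/ prefix is ignored.
    normalized = [tag[2:] if tag.startswith("W/") else tag for tag in candidates]
    return "*" in normalized or etag in normalized


def respond(body: bytes, request_headers: dict) -> tuple:
    """Return (status, headers, payload) for a GET request."""
    etag = make_etag(body)
    headers = {"ETag": etag, "Cache-Control": "max-age=3600"}
    # The client already holds this exact version: skip resending the body.
    if _matches(request_headers.get("If-None-Match", ""), etag):
        return 304, headers, b""
    return 200, headers, body


status, hdrs, payload = respond(b"<h1>Hello</h1>", {})
status2, _, payload2 = respond(b"<h1>Hello</h1>", {"If-None-Match": hdrs["ETag"]})
print(status, status2)  # 200 304
```

The second request transfers no body at all, which saves network I/O on both ends and CPU time on the client that would otherwise re-parse the same content.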

You can estimate the environmental impact of a web page using online tools such as https://www.websitecarbon.com/.
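The arithmetic behind such estimates can be sketched as data transferred × energy per gigabyte × carbon intensity of the electricity grid. The Python function below is a simplified model, not Website Carbon’s actual methodology, and the constants in the example are placeholders rather than measured values.

```python
def page_view_co2_grams(page_bytes: int, kwh_per_gb: float, grid_g_per_kwh: float) -> float:
    """Rough CO2 estimate for one page view (simplified, illustrative model)."""
    gigabytes = page_bytes / 1e9
    energy_kwh = gigabytes * kwh_per_gb      # energy attributed to moving and serving the data
    return energy_kwh * grid_g_per_kwh      # grams of CO2 for that energy


# Placeholder inputs: a 2 MB page, 0.5 kWh per GB, 400 g CO2 per kWh.
per_view = page_view_co2_grams(2_000_000, kwh_per_gb=0.5, grid_g_per_kwh=400)
print(f"{per_view:.2f} g per view, {per_view * 100_000 / 1000:.1f} kg per 100k views")
```

Whatever the exact constants, the model is linear in page weight, so halving the bytes a page transfers halves its estimated footprint.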

Fortunately, usually what works for the user experience also works for energy efficiency.

However, there are situations where painful trade-offs have to be made. Take the polling frequency for updating background data: users want fresh data as soon as possible, but from a battery consumption perspective, polling should happen as rarely as possible. Of course, modern techniques such as push notifications or WebRTC reduce the number of polling requests required. Still, the two requirements sometimes contradict each other.
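When polling cannot be avoided, one common compromise is adaptive polling: poll quickly while data is changing and back off exponentially while it is not. Here is a minimal Python sketch; the function name and the 5-second/300-second bounds are assumptions chosen for illustration, not recommendations.

```python
def next_poll_interval(current: float, got_new_data: bool,
                       minimum: float = 5.0, maximum: float = 300.0,
                       factor: float = 2.0) -> float:
    """Return the number of seconds to wait before the next poll."""
    if got_new_data:
        return minimum                      # something changed: stay responsive
    return min(current * factor, maximum)   # nothing new: wait longer, up to a cap


interval = 5.0
for changed in [False, False, False, True, False]:
    interval = next_poll_interval(interval, changed)
    print(interval)  # 10.0, 20.0, 40.0, 5.0, 10.0 (one per line)
```

During quiet periods the device wakes up far less often, while a burst of activity brings the interval straight back to the minimum.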

IoT / IoE

Battery-operated devices are optimized on multiple levels, including more efficient dedicated operating systems, low-level software, and more energy-efficient protocols (for instance, Zigbee instead of Wi-Fi).

IoT veterans still try to avoid Linux-based devices on general-purpose hardware, as they are much less efficient than dedicated devices with much lower power consumption.

These factors are usually connected: devices requiring less power also require less CPU power, less network bandwidth, and less memory. However, more advanced devices, or, to put it bluntly, devices that are friendlier to more developers, are gaining popularity at the expense of these optimizations.

Power consumption seems to matter less for mains-powered devices than for battery-operated ones, where it significantly affects maintenance. For example, replacing batteries once per year versus once per month is a totally different experience for both users and IT operations.
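The gap between yearly and monthly battery replacements comes mostly from the duty cycle, meaning how long the device stays awake. A simple duty-cycle model, sketched below in Python, divides battery capacity by the time-weighted average current. All the currents and capacities are hypothetical, and the model ignores self-discharge, temperature, and radio transmission peaks.

```python
def battery_life_days(capacity_mah: float, active_ma: float, sleep_ma: float,
                      active_seconds_per_hour: float) -> float:
    """Duty-cycle estimate: capacity divided by the time-weighted average current."""
    duty = active_seconds_per_hour / 3600.0
    average_ma = duty * active_ma + (1.0 - duty) * sleep_ma
    return capacity_mah / average_ma / 24.0


# Hypothetical sensor: 2400 mAh battery, 20 mA while awake, 0.01 mA asleep.
frugal = battery_life_days(2400, 20, 0.01, active_seconds_per_hour=36)    # about 476 days
chatty = battery_life_days(2400, 20, 0.01, active_seconds_per_hour=360)   # about 50 days
print(round(frugal), round(chatty))
```

Staying awake ten times longer per hour turns a replacement well over a year apart into one every seven weeks or so, which is exactly the maintenance difference described above.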

It all affects the environmental footprint of those devices and of entire IoT ecosystems.

Of course, one may rightfully note that so many devices also mean much more electronic waste, which is another important thing to consider from a sustainability point of view.

Server Side

Moore’s Law

Moore’s law, at least in the popular sense of CPU performance doubling every 18 months or so, does not work anymore: silicon CPUs have not been getting faster at that pace for a long time. Physics prevents chips from getting faster as quickly as they used to.

Therefore, completely new paradigms are emerging such as quantum computing or neuromorphic computing.

Yet when it comes to the Thermal Design Power (TDP) of binary CPUs, we are benefiting from great achievements: even though CPUs are not getting faster at the pace we used to see, they are getting more and more power efficient.

This was driven by the demand for ever more powerful laptops with longer battery life and for more efficient data centers, both on-premise and in the cloud.

ARM vs. Intel?

We also witnessed a revolutionary move from Apple in 2020, when it ditched Intel CPUs and the x86/amd64 architecture and embraced its ARM-based M1 chips, which are known for their speed but should be even more famous for their power efficiency, sometimes substantially better than that of their Intel counterparts.

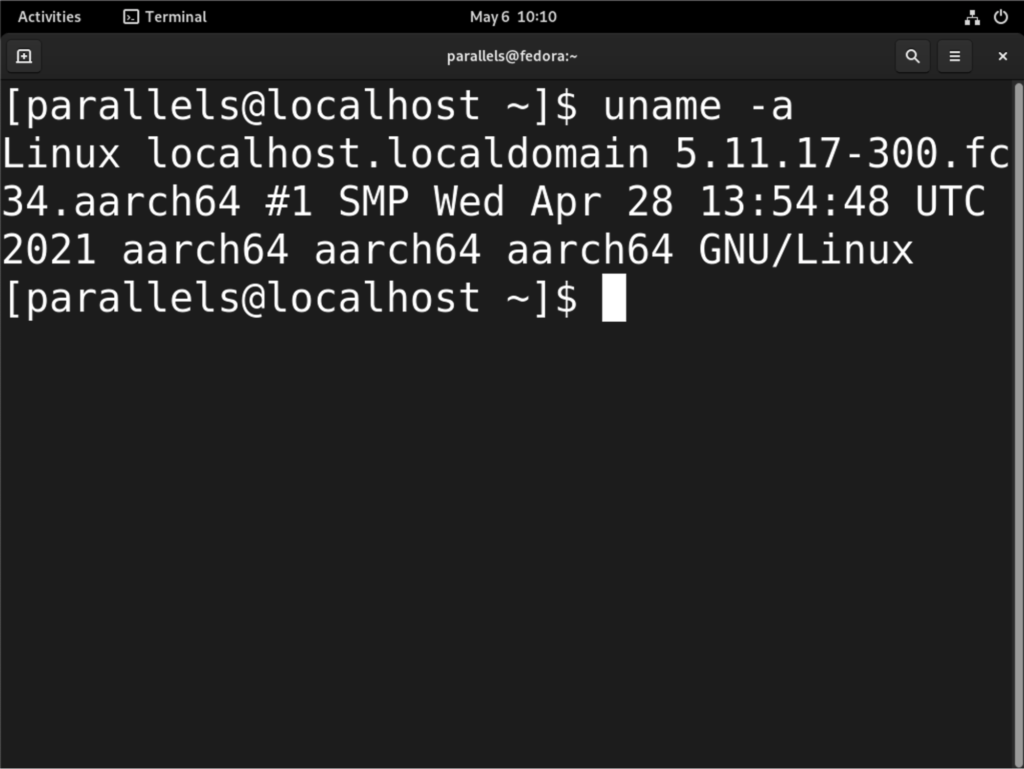

Fedora Linux arm64 running on a Mac M1 with Parallels

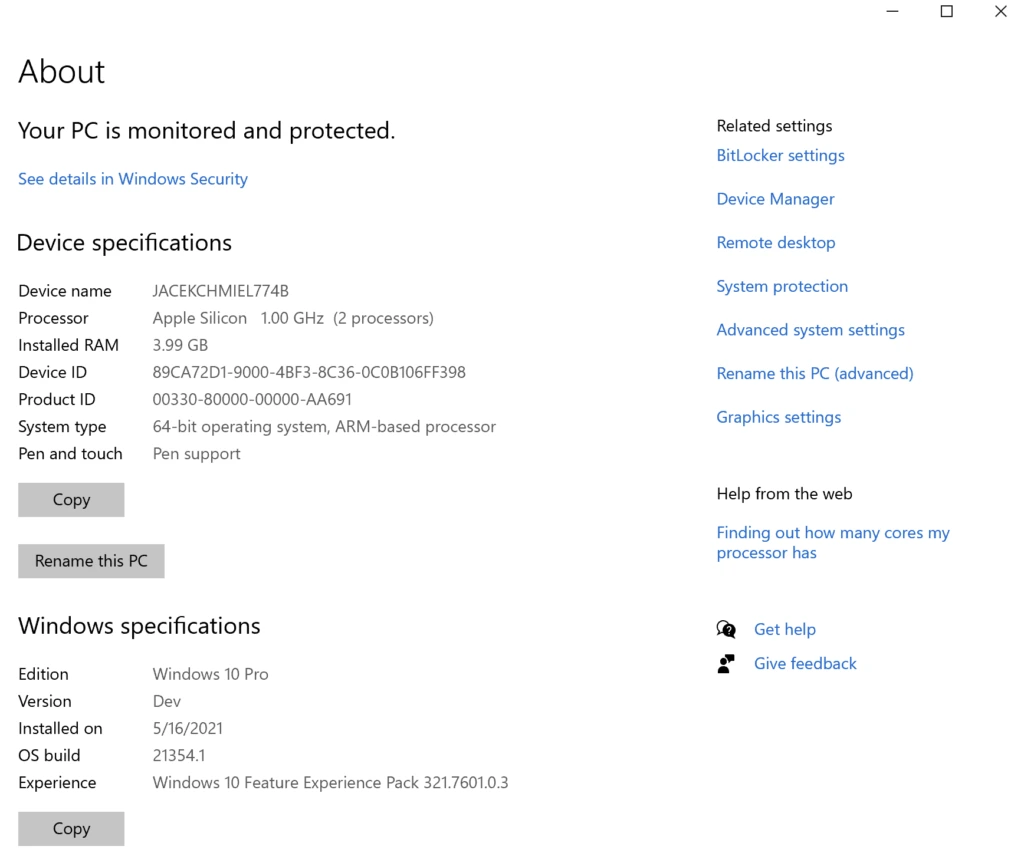

Microsoft created the arm64 version of their Windows 10 operating system which is supposed to be available on more and more Windows-based laptops in the near future. The initial reviews weren’t very positive, but that was just the first step.

(Screen of Windows 10 64 bit ARM, notice the Dev moniker; it’s not a stable version of OS)\

Multi-arch Containers

The same thing has started to happen on the server side, which means ARM chips in server rooms and at cloud providers. To make them more approachable, Docker images for arm64 are available for the most popular technologies and frameworks. Building and choosing multi-architecture containers is not just a question of fashion, as some claim; it can enable enormous power savings, which is great both for the environment (carbon footprint) and for cost savings.

Sometimes people ask, “What is wrong with x86/amd64? It is working just fine.” The first issue is the pace of performance improvements, which lags behind ARM, and the second is power efficiency. How much better is ARM? According to AWS, it is at least 42% more efficient than x86/amd64.
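It is worth spelling out what such a percentage means for the energy bill. Assuming “42% more efficient” means 42% more work per unit of energy, the same workload needs 1 / 1.42 ≈ 0.70 of the energy, so about 30% less, not 42% less. A tiny Python helper makes the conversion explicit:

```python
def energy_saving_fraction(efficiency_gain: float) -> float:
    """Share of energy saved for the same work when work-per-joule rises by efficiency_gain."""
    # Doing the same work at (1 + gain) times the work per joule takes 1 / (1 + gain) of the energy.
    return 1.0 - 1.0 / (1.0 + efficiency_gain)


print(round(energy_saving_fraction(0.42), 3))  # 0.296
```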

So, the simple thing for DevOps teams to do is to use multi-arch containers and start preparing for the arm64-based future of cloud computing.

Adopting distroless containers is another interesting trend to follow for multiple reasons; though less revolutionary, it also brings power consumption savings.
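A multi-arch image is published under a single tag that points to an image index (in Docker’s terms, a manifest list) with one entry per platform. When you pull it, the container runtime picks the entry that matches the host. The Python sketch below mimics that selection step to show the mechanism; the digests are made-up placeholders, and real clients also consider fields such as the CPU `variant`, which this sketch skips.

```python
import platform
from typing import Optional

# platform.machine() names differ from the GOARCH-style names used in image indexes.
_ARCH_ALIASES = {"x86_64": "amd64", "amd64": "amd64", "aarch64": "arm64", "arm64": "arm64"}


def host_architecture() -> str:
    machine = platform.machine().lower()
    return _ARCH_ALIASES.get(machine, machine)


def select_manifest(image_index: dict, os_name: str = "linux", arch: Optional[str] = None) -> str:
    """Return the digest of the image built for the requested (or current) platform."""
    arch = arch or host_architecture()
    for entry in image_index.get("manifests", []):
        plat = entry.get("platform", {})
        if plat.get("os") == os_name and plat.get("architecture") == arch:
            return entry["digest"]
    raise LookupError(f"no {os_name}/{arch} image in this index")


# Placeholder index: the digests are made up for illustration.
index = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.index.v1+json",
    "manifests": [
        {"digest": "sha256:aaaa", "platform": {"os": "linux", "architecture": "amd64"}},
        {"digest": "sha256:bbbb", "platform": {"os": "linux", "architecture": "arm64"}},
    ],
}

print(select_manifest(index, arch="arm64"))  # sha256:bbbb
```

On the build side, such images are typically produced with `docker buildx build --platform linux/amd64,linux/arm64 ...`, so the same tag can run on both x86 and ARM instances.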

Cloud to the Rescue!

All the cloud providers claim that they will soon achieve carbon neutrality and other energy efficiency goals thanks to sophisticated optimizations, cooling systems, electrical systems, etc. Plus, there are the already mentioned arm64 architectures of the future.

Cloud adoption is progressing, albeit more slowly than anticipated, and one of the benefits of this shift in computing will be improved power efficiency and carbon neutrality. If anyone needed another reason to transform faster, here is an environmental one.

→ Cloud transformation and the dilemmas of CIOs

Naturally, a lot also depends on how cloud services are configured, for example scaling them down when they are not needed (such as shutting down Kubernetes pods when demand is lower) or enabling automated power-off/suspension of services and servers that are not needed, for instance during the weekend. Fortunately, cloud providers offer these options and keep improving their automation.

This time around, the motivation for IT teams is very simple, as environmental friendliness goes hand in hand with lower cloud expenses.

It is also worth considering demand-driven cloud technologies, such as serverless architectures, which scale automatically with each function call.
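For demand-based scaling in Kubernetes, the Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), and it skips scaling when the ratio is within a tolerance (0.1 by default). The Python sketch below reproduces that rule in simplified form. The min/max bounds are hypothetical defaults, and the real controller also applies stabilization windows and other behaviors that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style calculation: scale replicas in proportion to load."""
    ratio = current_metric / target_metric
    # Close enough to the target: keep the current size to avoid constant churn.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Average CPU at 20% against a 50% target: 4 pods shrink to 2.
print(desired_replicas(4, current_metric=20, target_metric=50))  # 2
```

Scaling down in quiet periods is where the energy and cost savings come from; the tolerance band keeps the cluster from flapping around the target.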

Efficient and Inefficient Programming Languages

C and C++ are still used, not just for performance and low memory consumption, but also for energy efficiency, delivering more computing power per watt of consumed energy.

Newer languages also deliver on that promise of energy savings; Rust, for example, is known for its power efficiency.

At Avenga, we are big fans of another popular statically compiled language – Go, in which our products (Wao.io and couper.io) are written.

The rule of thumb is that statically compiled languages are more energy efficient than interpreted languages such as Python, which, despite its popularity, is infamous for its power consumption and inefficiency.

Somewhat ironically, most real-world Python applications are in data science and machine learning, where Python mostly orchestrates powerful GPUs or TPUs that run the heavy machine learning workloads. So even if Python were replaced by, say, the Julia language, it wouldn’t help much with these types of applications.

That said, the more data processing and logic is done on the Python side, the more it will hurt the environment. For example, complex drug interaction simulations are done in Julia for performance and power efficiency reasons.

The Java language and its runtime are somewhere in the middle, combining great expressive power with relatively high power efficiency. Java developers cannot claim to be the avant-garde of green computing, but they are certainly not the worst in this regard.
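The “Python side” effect is easy to see even without special tools: the same reduction written as an interpreted loop does far more CPU work than one delegated to a built-in implemented in C. The Python sketch below uses wall-clock time from `timeit` as a rough proxy for CPU work. It does not measure power, and the actual numbers depend on the machine and interpreter, so none are shown.

```python
import timeit


def total_python_loop(values):
    total = 0
    for v in values:      # every iteration is executed by the interpreter
        total += v
    return total


def total_builtin(values):
    return sum(values)    # the loop runs inside CPython's C implementation


values = list(range(1_000_000))
assert total_python_loop(values) == total_builtin(values)

loop_s = timeit.timeit(lambda: total_python_loop(values), number=5)
builtin_s = timeit.timeit(lambda: total_builtin(values), number=5)
print(f"interpreted loop: {loop_s:.3f}s, built-in sum: {builtin_s:.3f}s")
```

The same principle is why data science code leans on vectorized libraries: the less work stays in the interpreter, the fewer CPU cycles (and watts) each result costs.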

Optimal Code != Energy Efficient Code

Isn’t it common sense to write more efficient code as a way to ensure energy efficiency?

Unfortunately, it’s not so easy.

Well-optimized code, for instance code parallelized across multiple threads and cores, can be less energy efficient because it “wakes up” more CPU cores. The job will be completed faster, but the overall energy consumption may be higher than on a single core.
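A toy model shows why the answer depends on the workload. Energy is power draw multiplied by wall-clock time. Extra cores add power, but they only shorten the run to the extent that the work actually parallelizes. The Python sketch below uses made-up wattages and ignores frequency scaling and idle states. It is meant to illustrate the trade-off, not to predict real hardware.

```python
def job_energy_joules(cores: int, serial_seconds: float, parallel_efficiency: float,
                      static_watts: float, watts_per_core: float) -> float:
    """Toy model: energy = (fixed power + per-core power) x wall-clock time."""
    wall_seconds = serial_seconds / (cores * parallel_efficiency)
    power_watts = static_watts + cores * watts_per_core
    return power_watts * wall_seconds


# Hypothetical platform: 5 W fixed draw, 10 W per busy core, 100 s of serial work.
single = job_energy_joules(1, 100, 1.0, static_watts=5, watts_per_core=10)   # 1500 J
poor = job_energy_joules(4, 100, 0.5, static_watts=5, watts_per_core=10)     # 2250 J
good = job_energy_joules(4, 100, 0.95, static_watts=5, watts_per_core=10)    # about 1184 J
print(single, poor, round(good, 1))
```

With poor scaling, four cores finish sooner but burn 50% more energy than one; with near-perfect scaling, the fixed power is paid for a shorter time and the parallel run comes out ahead.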

There are patterns and antipatterns of green computing, depending upon the language and runtime, and this is just the beginning of the movement. There’s a lot that has yet to be determined. For now, the basic thing to remember is that it’s not as simple as running the code as fast as possible, which seems to be natural for many of us (I admit my share of the blame here).

Machine Learning

Machine learning is used in data centers and on local devices, such as smartphones, to optimize power and battery consumption. This is something worth remembering.

Nonetheless, ML for enterprises inevitably involves a series of experiments, trials, errors, refinements, and improvements. This is fundamental in the machine learning world.

And ML means at least GPUs, the more powerful the better.


One of the recommendations in this area is not to beat a dead horse: when a model is clearly not converging, adding more and more epochs is unlikely to help.

Whenever the problem seems to lie in the data (its statistics, quality, or labeling), rerunning the same training workloads with different parameters may not lead to acceptable results. You probably do not need a hundred training sessions warming your GPUs up to one hundred degrees Celsius to notice that problem.

If data- or algorithm-related problems are visible early in the process, experienced data scientists will know that it is pointless to run the training again and again; miracles are not going to happen. Stopping early saves the data science team the time spent waiting for yet another set of results and, even more importantly, the power consumption and CO2 footprint of those runs.

Another option is to run ML workloads in the cloud, where they are executed in arguably more efficient data centers.
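Part of this discipline can be automated with early stopping: training halts once the validation loss has stopped improving for a set number of epochs. Frameworks ship ready-made versions (for example, the `EarlyStopping` callback in Keras). The framework-free Python sketch below shows the logic. The class and the loss values are illustrative, and it will not fix a data problem; it only stops paying GPU power for runs that have stalled.

```python
class EarlyStopping:
    """Stop when validation loss has not improved by min_delta for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.epochs_without_improvement = 0

    def should_stop(self, val_loss: float) -> bool:
        if val_loss < self.best - self.min_delta:
            self.best = val_loss                  # meaningful improvement: reset the counter
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


stopper = EarlyStopping(patience=3, min_delta=0.01)
val_losses = [1.0, 0.8, 0.7, 0.71, 0.70, 0.72, 0.69, 0.68]  # made-up validation losses
for epoch, loss in enumerate(val_losses):
    if stopper.should_stop(loss):
        print(f"stopping after epoch {epoch}")  # stopping after epoch 5
        break
```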

Distributed Ledger Technologies (DLTs) aka Blockchain for Business

Public blockchain is strongly associated with cryptocurrencies and, more recently, with NFTs.

→ NFTs for digital art covered by Avenga Labs tech update

One of the key drawbacks of blockchain is that the most popular consensus algorithms of the current generation, such as proof of work, are very energy intensive. For those who are not aware, here is a famous example: if all credit card transactions were run on a blockchain, humanity would use up the output of all power plants combined, and some even predict that it would be impossible to build enough nuclear reactors, or that there is not enough uranium, to make it happen.

Yes, it’s that bad from an environmental perspective.
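Why is proof of work so power hungry? Miners compete by brute-force hashing until they find a hash below a target, and the expected number of attempts grows exponentially with the difficulty. The Python sketch below is a toy version: it requires a given number of leading hex zeros, so each extra zero multiplies the expected work by 16. Bitcoin’s real scheme (double SHA-256 of a block header compared against a numeric target) is more involved.

```python
import hashlib
from itertools import count
from typing import Tuple


def mine(block_data: str, difficulty: int) -> Tuple[int, str]:
    """Toy proof of work: find a nonce whose SHA-256 hex digest starts with `difficulty` zeros."""
    target_prefix = "0" * difficulty
    for nonce in count():
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest
    raise RuntimeError("unreachable: count() never ends")


for difficulty in range(1, 5):
    nonce, digest = mine("toy block", difficulty)
    # Expected attempts grow as 16 ** difficulty; the actual count varies with the input data.
    print(difficulty, nonce + 1, 16 ** difficulty)
```

Verifying a result takes a single hash, but finding it takes on average 16^difficulty of them. Real networks tune the difficulty so that this wasted work stays enormous by design.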

The usual ways to avoid this problem fall into two main categories.

The first is to use these technologies for fewer transactions, and only when they have clear benefits over traditional databases and messaging systems.

→ A case of a COVID-19 test results system in blockchain.

The other is to look for, and invest in, newer technologies in this family that are better optimized for energy usage.

The problem is their maturity and popularity: these are not just sets of libraries for developers but entire infrastructures and ecosystems, which, from a business perspective, should have a predictable lifespan before being invested in.

Some argue that, despite the progress and the new frameworks based on principles other than those of Bitcoin or Ethereum, these technologies are still far behind in the energy efficiency department.

We can only hope energy efficient tools for building private and hybrid DLTs are going to be the norm in the near future.

→ Explore how to choose the blockchain for your business

Next Steps

Just Leave Us Alone?

One of the most frequent complaints about even mentioning greener development is the fact that software teams are already overwhelmed with change requests, performance optimization, security fixes, as well as compliance and process optimization in order to stay competitive.

Adding green computing goals on top of all that may seem artificial and unnecessary. But “the times, they are a-changin’”.

→ Read more about Strategic Sustainability: What’s inside the box?

I can imagine that talking about environmental issues to happy new car owners in 1950s America would have been futile and met with even stronger resistance, as would talking to people fascinated back then by the emergence of nuclear power as an infinite source of energy. Now everybody understands how important ecology is for our survival as a species. Every car owner knows this, and although many of them still want faster cars, they support the idea of saving the climate for future generations.

→ Avenga sustainability initiatives

Mental Shift

The same shift in mentality will also change software projects for developers. It has already happened with energy efficiency requirements for mobile applications and IoT systems, driven by the obvious benefits for user and customer experience as well as by environmental goals. For computing in general, the benefits may or may not translate directly into user experience gains, but I hope this article has shown that there is so much to do, and a lot of it can be done even today.

“Be the change you want to see in the world.”

This quote, commonly attributed to Mahatma Gandhi, also applies to the software development world.